Childhood is a time of joy, exploration, and growth.

But for too many children, that joy is taken away by sexual abuse.

When sexual abuse is documented with photos and videos and shared with others, the cycle of harm continues.

This content is known as Child Sexual Abuse Material (CSAM).

CSAM used to be distributed through the mail. By the ’90s, investigative strategies had nearly eliminated the trade of CSAM.

And then, the internet happened.

In 2004, there were 450,000 files of suspected CSAM reported.

In 2022, there were over 87 million files of suspected CSAM reported.

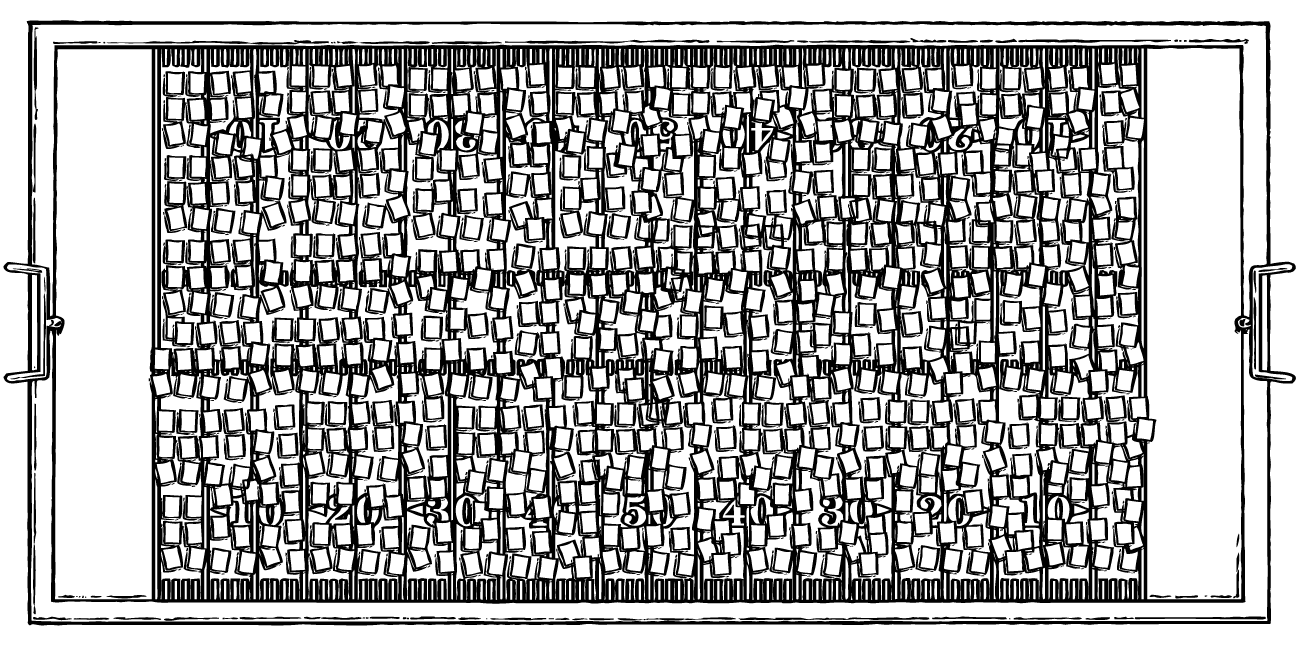

If each of the 87 million files of suspected CSAM reported to the National Center for Missing & Exploited Children (NCMEC) were represented by a letter-sized sheet of paper, they would cover the surface of an American football field 980 times.

And those are just the files that have been found.

The intersection of child abuse and technology has created a public health crisis.

How have all these files been created?

Bad actors are using technology to:

- Take advantage of a child’s social development online

- Take photos and videos of child sexual abuse

- Build communities to collect, share, and trade abuse materials, as well as share tips and encourage more violent abuse

- Share CSAM broadly across the internet

- Find children to groom for hands-on sexual abuse

- Solicit children for nude selfies or sexualized imagery

- Blackmail for financial gain (sextortion)

- Generate child sexual abuse material or scale grooming using generative AI technology

To better understand the proliferation of child sexual abuse material online and how we can stop it, let’s explore the main pathways of abuse that lead to the creation of CSAM.

Hands-On Sexual Abuse

Hands-on sexual abuse occurs with physical contact between a child and an abuser.

Because the abuser needs physical access to the child, this abuse is often initiated by a trusted adult — like a parent, relative, neighbor, coach, pastor, or babysitter.

After documenting the abuse and creating CSAM, the abuser shares it in online communities dedicated to the sexual abuse of children.

Like members of any community, they support each other. But here, that support centers on how to gain access to children, abuse them, and spread the images or videos.

When the perpetrator has this type of access, the abuse can happen often and over an extended period: weeks, months, and even years. With each additional day of abuse, new images are created and shared. These images are then collected, traded, and spread across the internet.

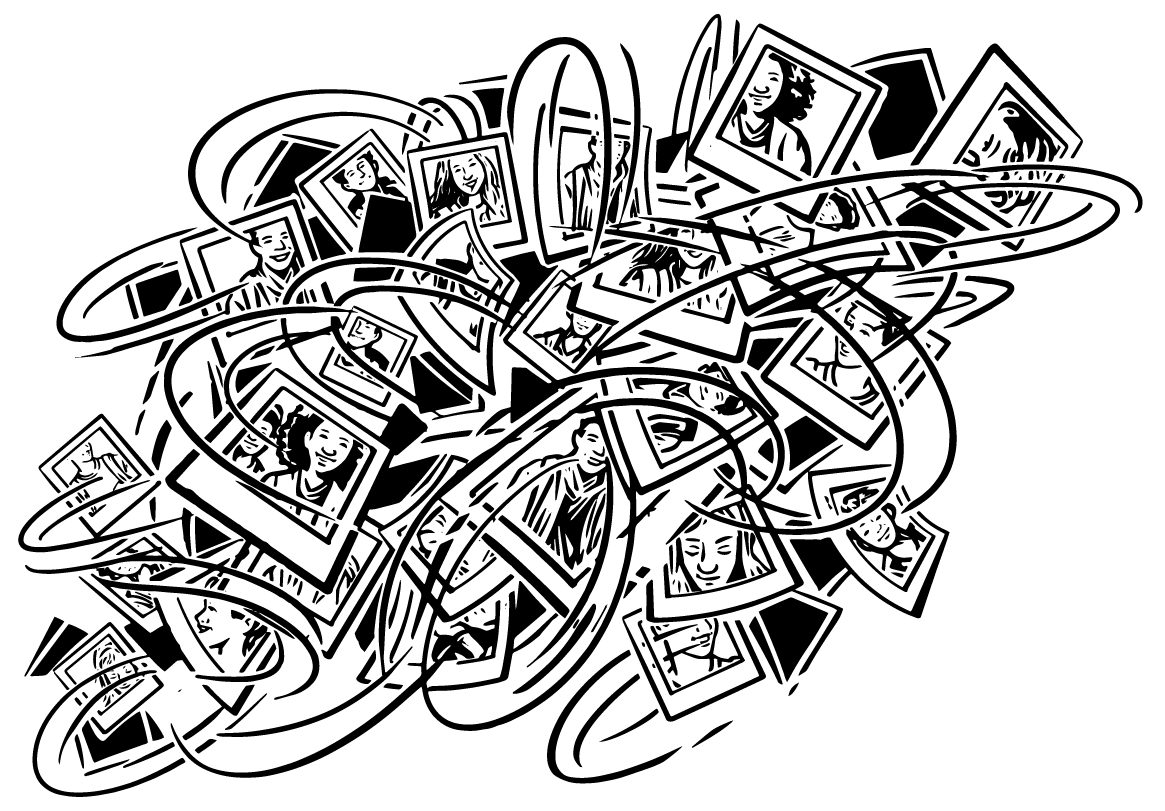

Some survivors of CSAM have had images of their abuse shared thousands or even tens of thousands of times a year, many years after being recovered from hands-on abuse. Each time an image is shared, the victim is abused again.

Over half of the identified child victims in widely circulated CSAM are prepubescent, and many are even too young to speak.

Visit our Research Center to learn more about the severity of CSAM and victim demographics.

Grooming

Making new friends often starts the same way: Finding something in common. Telling personal stories. Sharing a secret and creating a bond.

We all do this to build trust and connection. For many youth today, these new friendships are built entirely online.

“I think it is normal for people to connect with people online that they haven’t met in person because social media is such a big part of people’s [lives] that people will start to meet people online and form bonds.”

These online spaces can be critical communities of support, especially for LGBTQ+ youth and other marginalized groups.

But what happens when some new friends aren’t who they appear to be?

When a “friend” is actually an adult building a relationship with a child by pretending to be someone else, this is a form of grooming.

Online grooming is a process of exploiting trust to shift expectations of what is safe behavior. Groomers leverage fear and shame to keep a child silent.

In a recent report, Thorn found that two in five kids (40%) have been approached by someone they thought was attempting to “befriend and manipulate” them.

Chart: Percentage of minors who have received a cold solicitation online for a nude photo or video.

Online perpetrators use technology to scale their efforts, reaching large numbers of children at the same time.

Because abusers go wherever kids already are (like the platforms they use every day), grooming can happen just about anywhere online — quickly or over time.


Among kids who have shared nudes, 42% reported they had shared nude imagery with someone aged 18 or older.

After meeting minors on one platform, groomers frequently move them to another platform to increase their own security and the victims’ isolation.

What do these abusers want?

Children are groomed for many reasons:

- As a source of self-generated child sexual abuse material (aka nudes)

- To lead to an in-person meetup with the opportunity for hands-on sexual abuse

- For the chance to gain power and control (sextortion)


Sextortion

A young person might choose to share an intimate photo or video for many reasons. In most cases, they believe the photo is private and will stay between them and the receiver. Unfortunately, that’s rarely the case.

Sometimes, a perpetrator even threatens to expose these sexual images if a victim doesn't give them something they want.

This is called sextortion.

Sextortion can happen in various ways, involving people known offline, like ex-partners, or new online contacts. Recently, there’s been a sharp increase in online perpetrators sextorting minors for money. In 2022, NCMEC reported that 79% of the sextortion cases reported to it were financially motivated.

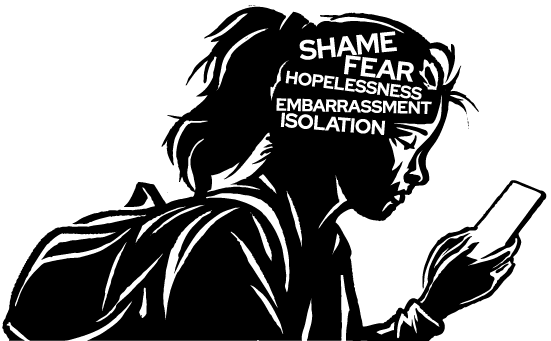

Kids experiencing sextortion often believe it’s their fault. Rather than recognizing they are victims of abuse, youth often feel ashamed and embarrassed—feelings that perpetrators take advantage of to keep them quiet as they continue to carry out their threats.

85% of young adults and teens said embarrassment kept them from getting help.

Sextortion can happen on any platform with messaging like social media, dating apps, gaming sites, and texting apps.

Technology enables perpetrators to groom and sextort at scale.

Between 2021 and 2023, reports to the CyberTipline of online enticement, the category that includes sextortion, more than tripled.


AI-Generated CSAM (AIG-CSAM)

The possibilities of generative AI seem endless. Many people are using it to work smarter, improve health, and create art.

Some are exploiting generative AI to abuse children.

Because open-source generative AI tools can be modified, used, and redistributed by anyone, abusers are already using them to scale every kind of online sexual harm. How?

Abusers can take one existing CSAM image and turn it into hundreds, with AI software positioning the child victim in different scenes or depicting them being harmed in new ways.

Bad actors can also take any publicly available images of children and create AI-generated CSAM with their likeness, also known as “deepfakes.” These images can then be used for grooming or sextortion.

While the child safety ecosystem is just beginning to understand and analyze these harms, we do know that now is the time for safety by design.

AI companies must lead the way to ensure children are protected as generative AI tech is built.

How we’re addressing AIG-CSAM:

- Driving Safety by Design practices within the generative AI industry to prevent the misuse of this technology to perpetrate sexual harms against children.

- Researching the impact of generative AI on child safety

Sexting & Non-Consensual Intimate Imagery

Today’s children grow up with near-constant access to phones and devices. Socializing online is now a normal part of growing up, and that includes exploring relationships, bodies, and sexuality in a digital world.

Sending naked photos, sharing photos of others, and being asked for nude photos are increasingly common.

Sharing nudes can be the result of exploration and natural curiosity.

Or it can be the result of pressure, grooming, and exploitation.

47% of kids said they had positive feelings immediately after sharing their nude image or video.

36% of kids who have shared self-generated CSAM (SG-CSAM) said they felt negative emotions (shame, guilt, or discomfort) after sharing a nude image of themselves.

Whether the minor was coerced or shared willingly, these photos and videos are considered self-generated child sexual abuse material because the abuser is not physically present in the visual content.

SG-CSAM poses a variety of dangers. We’ve found that the most harm occurs when content is reshared without consent.

Once it’s reshared, SG-CSAM is usually shared again and again, and is at risk of going viral through the child’s community and beyond.

This can result in victim-blaming. As if that’s not enough, images and videos may escape onto the open web and become traded CSAM.


Where do we go from here?

This issue is incredibly complex and constantly changing. The advancement of technology isn’t slowing down, but neither are we. At Thorn, we know that building innovative technology helps defend children from sexual abuse.

That’s why we develop tools to equip tech platforms to detect CSAM, empower parents and youth to prevent abuse, help law enforcement find children faster, conduct novel research to move the entire child safety ecosystem forward, and work with lawmakers globally to influence policy.

But we can’t do this alone.