Thorn Research: Understanding sexually explicit images, self-produced by children

December 9, 2020


We’ve been hearing growing concern from our partners on the front lines about child sexual abuse material (CSAM) in which there is no obvious abuser in the image. These images are referred to as self-generated images, or self-generated CSAM (SG-CSAM).

Self-generated abuse material, or SG-CSAM, is a complex challenge that spans a variety of experiences, risks, and harms for kids. Some images depict a child who is being groomed and coerced, their trust and vulnerabilities intentionally exploited. Others depict a teen “sharing nudes” with a partner, memorializing an act of romance and sexual exploration.

But whatever the origin of these images, in the hands of online child sexual offenders they pose a real and serious risk to the child depicted and to other children worldwide. Investigators now report that these images are common and make up a growing share of their caseloads. According to a 2018 NetClean report, 90% of police officers investigating online child sexual abuse (CSA) said it was common or very common to find self-generated sexual content during their investigations.

Thorn’s knowledge of emerging trends and tactics draws on our own work and directly on stakeholders around the globe, including investigators, NGOs, advocates, and survivors. That’s how we heard about the rise in Sextortion, how listening to survivors gave us the idea for Spotlight, and how we learned about self-generated child sexual abuse material (SG-CSAM).

The growth of SG-CSAM was clear, but the right interventions were not. Painting these trends with too broad a brush oversimplifies important details and risks introducing harms that did not previously exist.

If we are going to build the right interventions and programs that support resilient youth, we need to understand and appreciate the breadth and diversity of their experiences. Our work to date has helped us understand how to protect kids from online sexual exploitation, but with SG-CSAM a new question arose: Is every child in every image experiencing the same harms?

The short answer is no. A young person sharing nudes with their partner has not had the same experience as a child being groomed for exploitation, and the interventions to safeguard both of these children are distinct.

To prevent and combat harm, we must look at the motivations leading to, and the experiences stemming from, an image being taken. Who is taking the picture? What led them to take it? How do they feel after taking it and what do they want to do with that image?

These questions led us to our latest research, digging into this full range of experiences. And in this work, the voices of youth are our north star.

The result is our latest study of the attitudes and experiences surrounding the sharing of self-generated intimate images by youth, often referred to as “sharing nudes” or “sexting.”

Digging deeper

Thorn’s research is centered on the question: Where does harm occur at the intersection of child sexual abuse and technology, and how can that harm be prevented, combatted, and eliminated?

However, as we sought to better understand the rise in these reports, we struggled to find research that represented up-to-date attitudes in current digital ecosystems. Given the pace at which technology and platforms change, this is a critical component in delivering strategic interventions.

Consider how much has changed in recent years: 10 years ago, there was no TikTok, Instagram was about to launch, and only about 30% of U.S. adults owned a smartphone, compared with 81% today.

Our research methodology lends itself to capturing trends on the front lines of digital adoption. Understanding what’s happening to children right now requires a nimble approach, and that’s what we created for this research. Building on techniques and strategies from our Sextortion research, these findings are designed to deliver insights about children today.

That’s why we used social research methodologies to collect this data, ensuring it reflects the current landscape. And we went straight to the source, talking to over 1,000 kids aged 9-17, using focus groups and a nationally representative survey.

Equipped with the most current data and the voices of kids growing up in a digital reality, we aim to share our findings with the ecosystem of partners who serve kids and to advance the public conversation about reducing the potential harms of SG-CSAM to youth.

What we learned: 3 critical themes

This research was just the first step as we look to deepen our understanding of how and why youth are sharing self-generated sexual content. Here are three key learnings that provide us with a starting point:

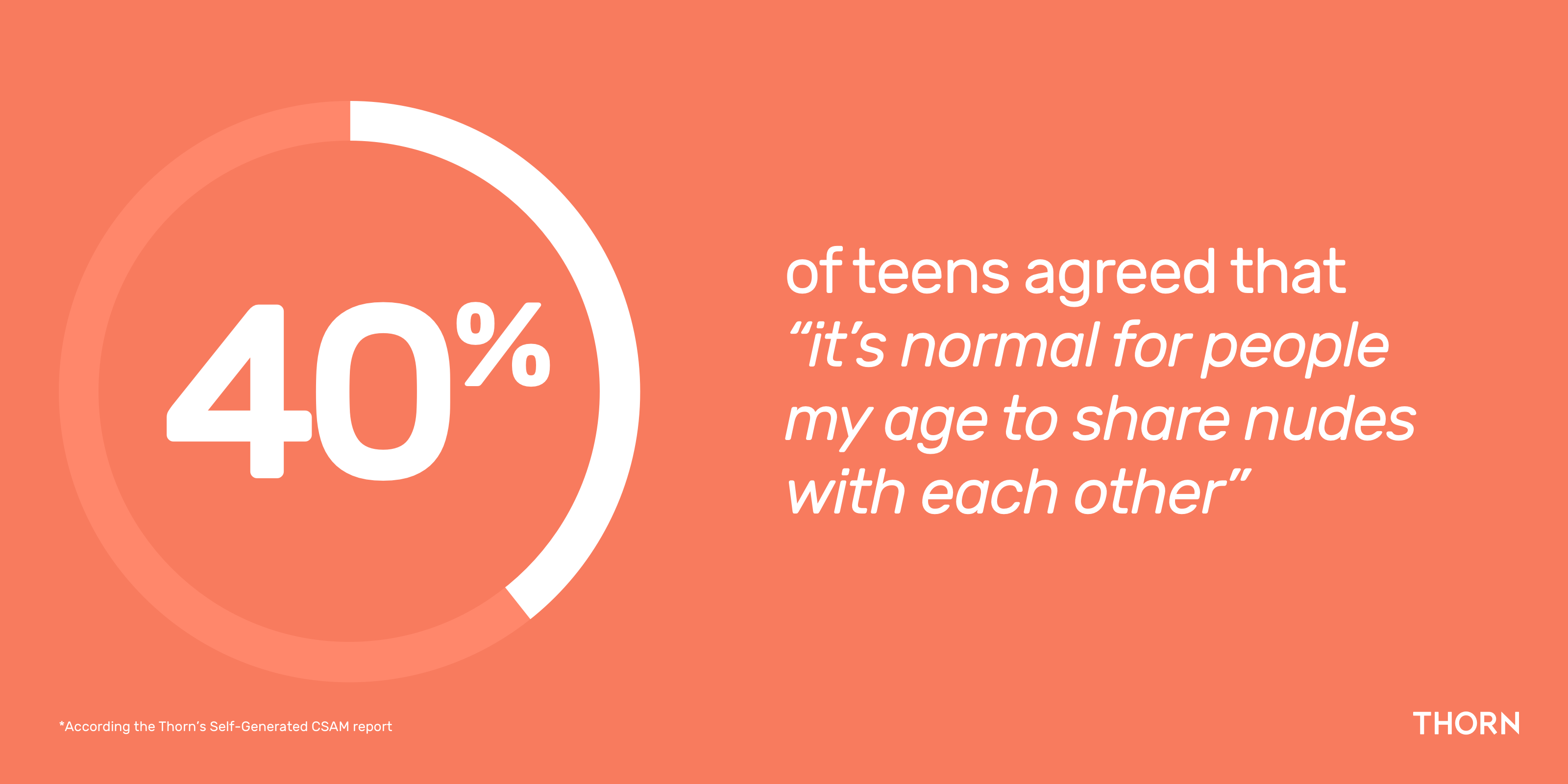

1. Sharing intimate imagery through sexting or sending nudes is increasingly common among young people. And kids don’t view it as fundamentally bad.

As one survey respondent noted, “We all do it. It is ok.”

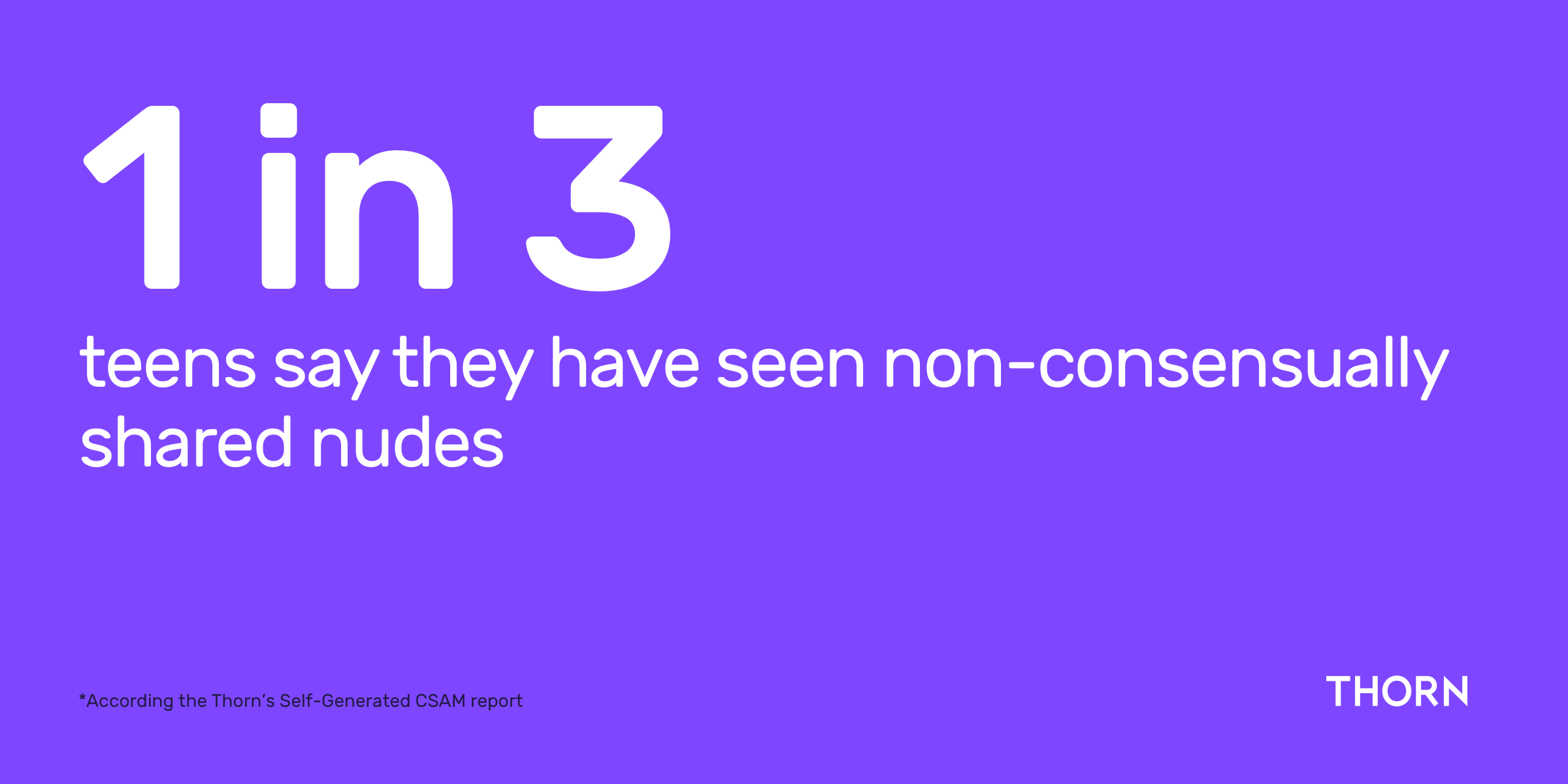

2. When a nude is shared beyond its original intended recipient, it changes the stakes radically, and that is happening at an alarming rate.

Sharing these images is increasingly seen among youth as a normal part of modern intimate behavior and development. However, once the content is created, the potential for resharing exists immediately. We’ve found that the greatest harm very often occurs when content is reshared without consent.

That’s a major concern when nearly 1 in 3 teens say they have seen non-consensually shared nudes, a figure that is very likely underreported given the sensitivity of the topic and the possibility of consequences.

And the vast majority of kids are navigating this digital landscape, the risks it presents, and the generation of sexual content with little or no guidance from adults.

3. A culture of victim-blaming may make it harder for children to seek help when things go wrong.

Whether through personal experience or word of mouth among friends, kids anticipate being blamed first, and possibly only, if their nudes are leaked.

More than half of teens exclusively or predominantly blame the person in the image when that image is leaked. Caregivers share a similar sentiment, with 50% of female caregivers and 60% of male caregivers exclusively or predominantly blaming the young person whose image was leaked.

It isn’t that caregivers are blind to the risks of sexting: more than half believe nudes are being shared at their child’s school. But “the talk” they tend to have with their kids relies on the deterrent effect of dire consequences rather than on opening a dialogue about resilience.

There is still much more to learn and much more to talk about, and we’ll be sharing a fuller readout of the findings of this research soon. Subscribe to our email updates and follow us on social media to stay up to date.

But this is a starting point.

Youth voices are key to understanding the issue

What we do know is that kids are facing tough choices online. Peer pressure and the normal challenges of coming of age are increasingly playing out in digital spaces, which can be difficult for adults to understand and relate to. Add school shutdowns due to COVID and more time spent online in general, and children face more situations that require them to make choices online.

Some behaviors that are alarming to caregivers are increasingly normative to kids. But even normative behaviors can carry significant risks. How can parents identify areas of risk and educate their kids?

The only way to understand the risks our children face, and to empower them to make good choices, is to talk to them. Share in their experiences, spend time gaming with them, ask about their online friends, and ask them to teach you how to use their favorite apps.

Hear their voices.

An open channel of communication with kids is a tool that can support them throughout their growth, online and beyond.

We’re all learning about this together. We will keep learning and sharing, and in partnership with those on the front lines in industry, law enforcement, and direct-service organizations, we will continue to build tools to protect kids in an ever-changing world.
